Data API best practices

This article covers best practices for using the data API in Betty Blocks to create scalable and well-structured applications.

A clean, properly structured database is crucial for stable, fast application performance and for reliable integrations that let external systems work with Betty Blocks. Poor data structures, on the other hand, can lead to issues such as empty values, duplicate records, or missing properties, which affect both functionality and user experience.

Following these recommended practices can prevent common data issues and keep your applications organized and reliable.

Optimized relations

Complex relationships between models can slow down query performance. Best practices include:

-

Limit relations: Limit the number of relations between models (preferably no more than five). For example, an Order model may relate to Customer, Product, and Shipping models, but avoid adding excessive connections beyond this.

-

Bridge models: For more complex relationships, use bridge models instead of relying solely on 'Has and belongs to many' relations. For instance, an order may relate to multiple products, but to capture the quantity of each product, you would create a bridge model, like OrderLine, to store additional details.
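The bridge-model idea can be sketched in plain Python to show where the extra details end up. This is not Betty Blocks code: the `Order`, `Product`, and `OrderLine` classes and their fields are hypothetical stand-ins for models, chosen only to illustrate that the quantity belongs to the link between an order and a product, not to either model on its own.

```python
from dataclasses import dataclass, field


# Hypothetical stand-ins for Betty Blocks models; not a Betty Blocks API.
@dataclass
class Product:
    id: int
    name: str
    price: int  # whole currency units, for simplicity


@dataclass
class OrderLine:
    """Bridge record: links one order to one product and stores the quantity."""
    product: Product
    quantity: int


@dataclass
class Order:
    id: int
    lines: list[OrderLine] = field(default_factory=list)

    def add(self, product: Product, quantity: int) -> None:
        # Each product/quantity pair becomes its own OrderLine record
        self.lines.append(OrderLine(product, quantity))

    def total(self) -> int:
        # The total is derived from the bridge records, not stored on Order
        return sum(line.product.price * line.quantity for line in self.lines)


laptop = Product(1, "Laptop", 1200)
mouse = Product(2, "Mouse", 20)
order = Order(1)
order.add(laptop, 2)
order.add(mouse, 3)
print(order.total())  # 2460
```

With a plain "Has and belongs to many" relation there is no record to hold `quantity`; the bridge model gives it a natural place and leaves room for further line-level details later.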

Querying

-

Using indexes: Indexes improve performance when filtering or sorting large datasets. For example, if you sort a list of customer names alphabetically, an index on the Name column makes this operation faster, though it can also slow down the creation of new records.

Note: Avoid over-indexing and only create indexes for fields that are frequently queried, as too many indexes can degrade performance when adding or updating records.

-

Nested relations: Filtering on nested relations, especially with OR conditions, can significantly impact performance. Queries involving multiple relationship layers require extra processing, and OR conditions add complexity by forcing the system to check multiple paths, leading to slower response times.

-

Large text properties: Avoid filtering or sorting on properties that hold large amounts of data, such as Multi-line text or Rich text fields. These types require more resources to process, which slows down queries.

-

Avoid querying unnecessary relationships: When data has multiple relationships (e.g., customers and their orders), query related objects only when you actually need them. Fetching every related object can significantly increase response times and put additional strain on your server.
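The effect of an index on filtering can be observed with Python's built-in `sqlite3` module. This is only a generic SQL illustration: Betty Blocks manages its own database and indexes, and the `customer` table and `idx_customer_name` index below are hypothetical. The sketch compares SQLite's query plan for the same filter before and after indexing the filtered column.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in; this is not how
# Betty Blocks stores data or creates indexes.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO customer (name) VALUES (?)",
    [(f"Customer {i}",) for i in range(1000)],
)


def plan(sql: str) -> str:
    """Return SQLite's query plan for `sql` as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column is the detail text


query = "SELECT id, name FROM customer WHERE name = 'Customer 42'"
before = plan(query)  # no index yet: SQLite scans the whole table

# Index only the column that is filtered on frequently (hypothetical name)
conn.execute("CREATE INDEX idx_customer_name ON customer (name)")
after = plan(query)  # SQLite can now search via idx_customer_name

print(before)
print(after)
```

The plan switches from a full scan to an index search. The write-side cost mentioned in the note above is not measured here, but every extra index is one more structure to maintain on each insert or update.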

Model complexity and property limits

To avoid performance bottlenecks, you should also manage the complexity of your data models:

-

Choose the appropriate property types: Use more complex property types, such as Rich text, only when necessary. For example, if you only need to store a short text, use simple Text (single-line) instead of Rich text to avoid unnecessary overhead.

-

Limit property count: Too many properties in a single model can indicate an overly complex structure, which may lead to performance issues. If your model is getting bloated with excessive properties, consider splitting it into multiple related models.

-

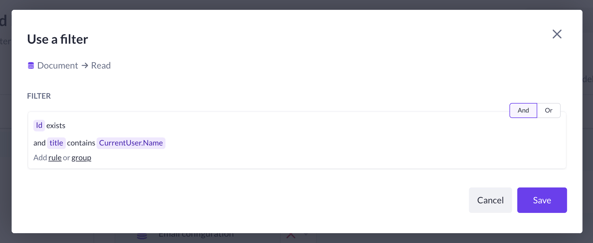

Permission levels: Every permission filter on a model is applied in addition to any query on that model. There is no fixed limit on the number of filters you can use, but filters based on relationships affect application performance: filters that rely on related data tend to slow down queries.

-

Avoid duplications: Ensure there are no redundant columns in your data tables or properties in your models. Extra columns can lead to unnecessary data duplication and storage overhead.

Validations and dependencies

Validations ensure data integrity by enforcing specific formats and rules:

-

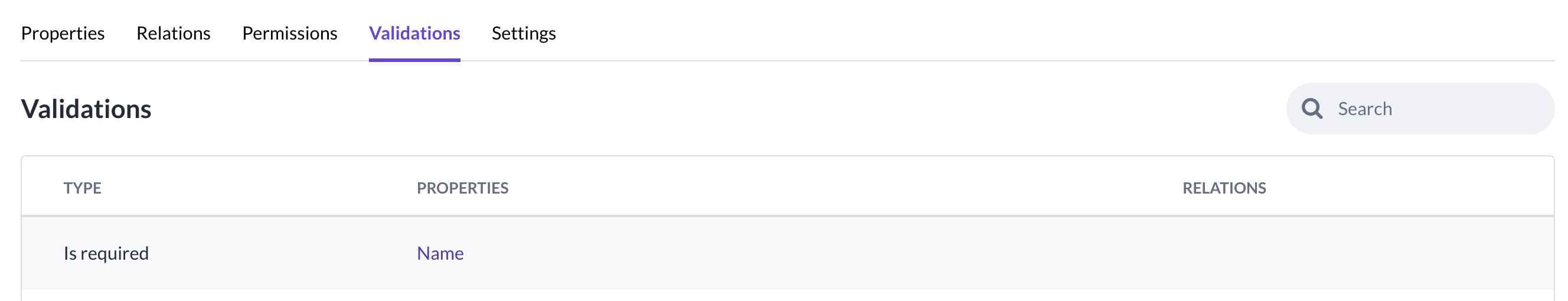

Set validations: Use the Validations tab to review and enforce rules like Is required or specific input formats (e.g., email addresses, phone numbers). Make sure that important fields such as First name and Last name are marked as required.

-

Mark at least one property per model as required to prevent the application from creating empty records.

-

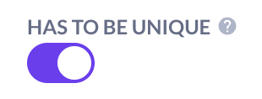

Mark at least one property per model as Has to be unique to prevent duplicate records.

-

Edit existing validations: Validations can be easily modified based on new requirements. For instance, if a field that was previously optional now becomes mandatory, you can adjust the validations without changing the entire model.

-

Check dependencies: Before deleting a model or relation, ensure that it’s not being used elsewhere, such as in an action or a page. Failure to check dependencies can lead to errors after deletion.
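To make the effect of Is required and Has to be unique concrete, here is a small Python sketch of the checks they amount to. It is not how Betty Blocks implements validations (those are configured in the Validations tab, not written in code); the `validate` function and the `first_name`, `last_name`, and `email` property names are hypothetical.

```python
from typing import Any

# Hypothetical rules for a Customer model, mirroring "Is required" and
# "Has to be unique" settings; not a Betty Blocks API.
REQUIRED = ("first_name", "last_name", "email")
UNIQUE = ("email",)


def validate(record: dict[str, Any], existing: list[dict[str, Any]]) -> list[str]:
    """Return validation errors for `record`; an empty list means it is valid."""
    errors = []
    for prop in REQUIRED:
        value = record.get(prop)
        # Treat missing values and blank strings as empty
        if value is None or (isinstance(value, str) and not value.strip()):
            errors.append(f"{prop} is required")
    for prop in UNIQUE:
        value = record.get(prop)
        if value is not None and any(row.get(prop) == value for row in existing):
            errors.append(f"{prop} has to be unique")
    return errors


existing = [{"first_name": "Alice", "last_name": "Smith", "email": "alice@example.com"}]
print(validate({"first_name": "Bob", "last_name": "Johnson", "email": "bob@example.com"}, existing))
# []
print(validate({"first_name": "", "last_name": "Smith", "email": "alice@example.com"}, existing))
# ['first_name is required', 'email has to be unique']
```

The second record is rejected twice: once because a required property is blank, and once because its email already exists.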

Data normalization

Data normalization is a set of practices for avoiding redundancy and keeping a clean, maintainable structure in your database. It reduces the chances of update, insert, and delete anomalies by organizing data into related tables (related models). Here’s an example:

| Order ID | Customer name | Customer address | Product name | Quantity | Price |
|---|---|---|---|---|---|
| 1 | Alice Smith | 123 Elm St. | Laptop | 1 | 1200 |
| 2 | Bob Johnson | 456 Oak Ave. | Laptop | 2 | 1200 |
| 3 | Alice Smith | 123 Elm St. | Mouse | 1 | 20 |

Unnormalized table issues:

• Redundancy: Alice’s information repeats.

• Update anomaly: If Alice changes her address, it needs to be updated in multiple rows.

• Insert anomaly: You can’t add new products without creating an order.

• Delete anomaly: Deleting Bob’s order would lose his customer information.

The solution is to split the data into related tables:

1. Customer table/model:

| Customer ID | Customer name | Customer address |
|---|---|---|
| 1 | Alice Smith | 123 Elm St. |
| 2 | Bob Johnson | 456 Oak Ave. |

2. Product table/model:

| Product ID | Product name | Price |
|---|---|---|
| 1 | Laptop | 1200 |
| 2 | Mouse | 20 |

3. Order table/model:

| Order ID | Customer ID | Product ID | Quantity |
|---|---|---|---|
| 1 | 1 | 1 | 1 |
| 2 | 2 | 1 | 2 |
| 3 | 1 | 2 | 1 |

By splitting the data into related tables/models (Customers, Products, and Orders), we eliminate redundancy: Alice’s address is stored once, so changing it is a single update. Insert and delete anomalies are avoided too, because products can exist without orders and deleting Bob’s order no longer removes his customer record.
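The split can be reproduced in a few lines of Python, starting from the rows of the unnormalized table. This is an illustration of the normalization step, not a Betty Blocks import action; the `normalize` function and dictionary keys are hypothetical, and matching customers and products by name is a simplification (a real import would match on a unique property such as an email address or product code).

```python
# Rows of the unnormalized order table (one row per order)
rows = [
    {"order_id": 1, "customer": "Alice Smith", "address": "123 Elm St.", "product": "Laptop", "quantity": 1, "price": 1200},
    {"order_id": 2, "customer": "Bob Johnson", "address": "456 Oak Ave.", "product": "Laptop", "quantity": 2, "price": 1200},
    {"order_id": 3, "customer": "Alice Smith", "address": "123 Elm St.", "product": "Mouse", "quantity": 1, "price": 20},
]


def normalize(rows):
    """Split flat order rows into customer, product, and order tables (hypothetical helper)."""
    customers = {}  # keyed by customer name (simplification)
    products = {}   # keyed by product name (simplification)
    orders = []
    for row in rows:
        if row["customer"] not in customers:
            customers[row["customer"]] = {
                "id": len(customers) + 1,
                "name": row["customer"],
                "address": row["address"],
            }
        if row["product"] not in products:
            products[row["product"]] = {
                "id": len(products) + 1,
                "name": row["product"],
                "price": row["price"],
            }
        # The order keeps only references (IDs) plus its own data
        orders.append({
            "id": row["order_id"],
            "customer_id": customers[row["customer"]]["id"],
            "product_id": products[row["product"]]["id"],
            "quantity": row["quantity"],
        })
    return list(customers.values()), list(products.values()), orders


customers, products, orders = normalize(rows)
print([(o["customer_id"], o["product_id"]) for o in orders])  # [(1, 1), (2, 1), (1, 2)]
```

The resulting IDs match the three tables above, and Alice's address now appears in exactly one customer record.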

Tip! Instead of multiple boolean flags in a model, consider using a List property to group related data. This makes it easier to manage and query. For instance, rather than adding separate flags for 'Active', 'Verified', or 'Premium', you could use a Status field with a list of values.
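In Python terms, a List property behaves much like an `Enum`: one field with a fixed set of allowed values. The sketch below uses that analogy; the `Status` enum and the `users` records are hypothetical and do not represent how Betty Blocks stores List values.

```python
from enum import Enum


class Status(Enum):
    # Allowed values of a hypothetical Status list property
    ACTIVE = "Active"
    VERIFIED = "Verified"
    PREMIUM = "Premium"


users = [
    {"name": "Alice Smith", "status": Status.PREMIUM},
    {"name": "Bob Johnson", "status": Status.ACTIVE},
]

# One property to filter on, instead of checking several boolean flags
premium = [u["name"] for u in users if u["status"] is Status.PREMIUM]
print(premium)  # ['Alice Smith']

# Values outside the list are rejected
try:
    Status("Gold")
except ValueError as err:
    print(err)
```

Note that a single field holds one value at a time, so this pattern fits best when the states are mutually exclusive.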

Importing data

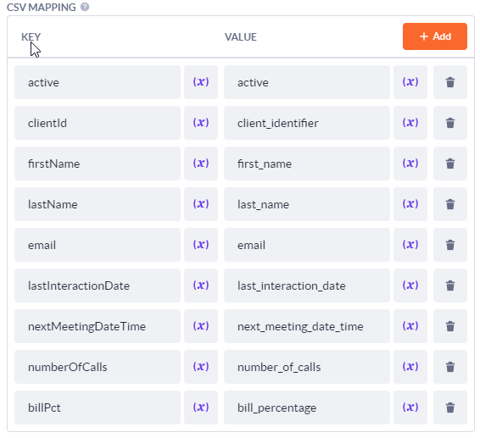

Betty Blocks doesn’t have a built-in import feature, so importing data requires creating custom import actions for each use case. One option for handling data imports is the Import from CSV/XLS(X) function block from the Block Store. This function allows you to import data from a file into your application and map its contents to your data models.

-

Column mapping: You can map columns from the import file to specific properties in your data model. This allows for flexibility when importing different datasets.

-

Handling new and updated records: You can define separate mappings for importing new records and for updating existing ones. This way, updates change only the properties you map and don't overwrite critical information.

-

Deduplication option: To avoid duplicate records, use the deduplication feature. This compares a column from your import file (such as an ID or email) with unique values in your database to make sure duplicates aren’t created.
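The three ideas above (column mapping, separate create and update mappings, and deduplication on a unique value) can be sketched in plain Python with the standard `csv` module. This does not call or reproduce the Import from CSV/XLS(X) function block, whose options are configured in Betty Blocks; the `import_rows` function, the column names, and the mappings are hypothetical.

```python
import csv
import io

# Hypothetical mappings from file columns to model properties. New records
# get every mapped column; updates deliberately leave "name" untouched.
CREATE_MAPPING = {"E-mail": "email", "Full name": "name", "Address": "address"}
UPDATE_MAPPING = {"E-mail": "email", "Address": "address"}
DEDUP_COLUMN = "E-mail"   # column in the import file (assumption)
DEDUP_PROPERTY = "email"  # unique property in the data model (assumption)


def import_rows(file_text: str, records: list[dict]) -> tuple[int, int]:
    """Create or update records from CSV text; return (created, updated)."""
    by_key = {record[DEDUP_PROPERTY]: record for record in records}
    created = updated = 0
    for row in csv.DictReader(io.StringIO(file_text)):
        key = row[DEDUP_COLUMN].strip()
        existing = by_key.get(key)
        if existing is None:
            # No match on the unique property: create a new record
            new = {prop: row[col].strip() for col, prop in CREATE_MAPPING.items()}
            records.append(new)
            by_key[key] = new  # a repeat of this key later in the file updates it
            created += 1
        else:
            # Match found: apply only the update mapping
            for col, prop in UPDATE_MAPPING.items():
                existing[prop] = row[col].strip()
            updated += 1
    return created, updated


records = [{"email": "alice@example.com", "name": "Alice Smith", "address": "123 Elm St."}]
file_text = (
    "E-mail,Full name,Address\n"
    "alice@example.com,A. Smith,789 Pine Rd.\n"
    "bob@example.com,Bob Johnson,456 Oak Ave.\n"
)
print(import_rows(file_text, records))  # (1, 1)
print(records[0]["name"])  # Alice Smith
```

Alice's row matches on email, so only her address is updated and her name is preserved; Bob's row has no match and becomes a new record.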

For detailed instructions on configuring and using the Import from CSV/XLS(X) function, check out our documentation on GitHub.

Following these best practices for the data API in Betty Blocks helps keep your applications secure, fast, and scalable. Beyond them, be prepared to optimize your queries, secure your requests, and handle errors.

Good luck!